Don’t Try This at Home:

The Crisis and Challenge of ChatGPT

Beal St. George

99% of the time, I subscribe to the adage, “try it, you might like it!” I think this optimistic open-mindedness enhances our individual lives and also the energy of the entire universe (vaguely woo-woo, I know!). In my experience, though, “try it, you might like it!” works. It applies well to such things as meditation, queer romance novels, and roasted Brussels sprouts.

By this logic, I should be racing over to ChatGPT to ask it to write my first novel and then explain to me how to get it published. But ChatGPT—and its bedfellows like DALL·E 2—are, for me, a bridge too far. (And using them might actually upset the vibes of the universe and the trajectory of life on earth instead of improving either of these things.) (Ok! This is dramatic!)

So I’m breaking my own rule: I haven’t tried it, and still, I’m anti-ChatGPT.

My credentials on AI are minimal at best—I’m a chronically under-tech’d human, but I still live in the world, own an iPhone, and make TikToks for work. My personal preferences are often analog, but perhaps that’s a useful perspective from which to examine *gestures wildly* all of this.

ChatGPT has been publicly available since the beginning of December. Its premise, in very rudimentary terms: ChatGPT is an experimental chatbot that consumes billions of data points, digests them in its little robot tummy, and uses them to create something altogether new. (Compare ChatGPT, for example, to the human brain.) It can write poems, solve math problems, find errors in code snippets—the applications are widespread.

When this piece hits the WWW, ChatGPT will digest it, too—this snack-sized critique of its very existence. ChatGPT, like the Very Hungry Caterpillar, just keeps eating and eating (and taking up more and more server space, which, as one anthropologist put it, has “staggering ecological impacts”).

In the past month, ChatGPT’s effect has been mostly benign: I’ve seen people jokingly use it for the bit—to write an article or develop interview questions. Others have downplayed the tool, calling its generative language models rudimentary. Still others have—I find this so metal, though I know it’s nothing new—used it to write final papers for classes.

But as I see it, ChatGPT’s very existence is profoundly threatening to the ways we live and think, which is why I reject even its so-called “innocent” or experimental usage.

Source: Equinox, November-December 1982, Licensed under CC

I know: Big Robot is already here. It has been here a long time—“artificial intelligence” was first developed as an academic discipline more than sixty years ago. There are corners of AI from which lots of useful technologies arose: think optical character recognition (which converts handwritten or printed text into machine-encoded text, making the written word more accessible to people who are blind or visually impaired), or automatic language translation.

Since its inception, AI has evolved… a lot. From cruise control in our cars to targeted advertising on our browsers, AI’s applications are too numerous to list practically anywhere. (I wonder if ChatGPT would list them if I asked?) Now, machine intelligence is becoming freakishly good at doing things we previously believed to be uniquely human or, at least, corporeal. Things like drawing, taking photos, recognizing speech, stringing together sentences… you get the idea.

In an uncanny valley-esque way, the “AI effect” causes us to wrongly believe that when a problem has been solved by AI, and its daily use becomes mainstream, it is “no longer AI.” Writing in 2002 for WIRED Magazine, Jennifer Kahn declared the proliferation of AI “a trend that’s been easy to miss, because once the technology is in use, nobody thinks of it as AI anymore.”

This, at its core, is what scares me so much about ChatGPT: every day, we inch closer to a world in which our lives are run by computers. And in the most insidious of ways, the conveniences of our increasingly automated world have lulled us into no longer being able to decide on our own what we want or need, because we’re subscribed to the cult of busy-ness and it’s so easy to open an app with predictive algorithms that will tell us what we bought/ate/listened to last or what restaurants are nearby—that is just one less thing to think about in this crazy world!

We are so out-of-touch with our embodied selves that rather than spending a few moments present with our humanness, wondering what we want to eat or watch or listen to, we prefer to open an app to see what’s suggested based on our past behavior. We each end up in our own whirlpool of homogeneity, increasingly separated from each other and from our own selves.

In case it’s not clear: I’m guilty of this! I ask Siri what to do with leftover ricotta cheese instead of experimenting in the kitchen. I look up the nearest pizza place instead of asking my neighbors for a recommendation.

But this new way of “living” is also terrifying. Sometimes the conveniences of AI help us discover new things (like Peels on Wheels, bless you). Other times, we choose to let computers tell us what to do rather than risk experiencing even a hint of discomfort. It is in these moments that I’m imagining all of us becoming a little less AI-dependent, and a little more DIY-curious.

The more AI does our thinking for us, the less “human” thinking becomes. Which leads me to wonder what else Big Robot (read: ChatGPT) can take from us—and what might still remain uniquely ours.

When we write, we’re describing some confluence of what we’re seeing, feeling, and knowing. There’s this gas station up the street from my house; I drive past it probably every other day, sometimes more than once a day. This past weekend, I realized for the first time that between the gas pumps are two massive, blue-gray brutalist columns, upon which the roof of the plaza appears placed gently. The whole thing resembles not any gas station I’ve ever seen before (it’s more like a rectilinear mushroom), but it does feel familiar. Suddenly, I’m 19 years old again, studying abroad, on a bus leaving Paris, watching the architecture change from Haussmannian elegance to merciless concrete, feeling a reminiscent loneliness rising in my chest. At the risk of over-poeticizing here, these moments give me frisson; they remind me that I’m alive.

By writing it down, I discover what happens when I combine words or phrases or ideas in unexpected ways. I find this fascinating. It’s also a fallible, vulnerable process. I hope that this practice is unmistakably human-only, but I very much fear that it’s not.

Vintage Computer Ad is licensed by Mark Mathosian under CC

If ChatGPT and its Big Robot compatriots are acting a lot like human brains, then it bears noting that ChatGPT’s creator, the company OpenAI, also enjoys some benefits of personhood. This should scare us, too.

OpenAI has been building “friendly AI” since 2015, with help from people who’ve run big corporations like Tesla (Elon Musk), PayPal (Peter Thiel), LinkedIn (Reid Hoffman) and JPMorgan Chase (Brad Lightcap). Musk has surprisingly said **two things!!** that I agree with: 1) that AI is humanity’s “biggest existential threat,” and 2) that AI can be compared to “summoning the demon.”

And though I’m no expert on business incorporation law, I am able to deduce that OpenAI, LLC is a for-profit company run by a non-profit called OpenAI Inc., whose mission is to ensure that artificial intelligence “benefits all of humanity.” So while the framing here is that, as a registered 501(c)(3), OpenAI Inc. is “unconstrained by a need to generate financial return,” I do wonder about the benefits of being a tax-exempt organization seeking investors for your research and deployment. Say, for example, that Microsoft invests $1B in the venture. Does Microsoft then get a massive tax break since they’re investing in a nonprofit? My money’s (capitalism joke) on yes.

Thus, we find ourselves in a quandary: should we be trusting the leaders of big corporations operating within tax-exempt frameworks to sort out AI for us for the “benefit of all of humanity”? Benefiting humanity is so hard to do under capitalism that there’s a specific certification process for companies that really want to try (and you can bet your bottom dollar that I’m proud to work for a B Corp). But trusting corporations, to date, has not been a tried-and-true method for achieving shared benefit.

At the risk of being misunderstood, I should clarify that the proliferation of Big Robot has made a world of difference, much of it positive, for humanity. It makes us safer when our cars see hazards before we do, or when computers can detect abnormalities in Pap smears. But rather than bringing AI’s incomprehensibly complex tools to bear for the improvement of society, ChatGPT, it seems, threatens to bring artificial intelligence as close as it can to mimicking the functioning of the human brain, and it’s this usage of AI that I find hard to believe “benefits all of humanity.”

If you ask ChatGPT what its biggest drawbacks are, it’ll say that bias is one of them. At least (?) it’s self-aware. If ChatGPT has consumed every bit of knowledge thus far created, then it has spent a disproportionate amount of time consuming racist thoughts. And ChatGPT does not easily distinguish between fact, fiction, and opinion. It boldly asserts factual inaccuracies, doesn’t cite its sources… These are red flags for transparency writ large, but they’re especially detrimental in whatever post-truth/post-facts/culture war world we’re living in now.

I am uninterested in robots—who are consuming and then generating content based on statistical averages—determining, or even weighing in on, how we build a truly equitable future. I want to do that with my neighbors, my friends, and my community. If indeed we want to build a “better” (read: equitable, antiracist, climate-resilient) world, then part of the work of decolonizing our minds, bodies, souls, and communities is imagining ways of being that don’t exist yet.

The total number of texts that have set forth potentially world-changing paradigms is small. It’s small enough that ChatGPT can’t, by my rough estimation, give those texts their correct weight. They are more important, by leaps and bounds, than the thousands of years of texts written by a) those who were wealthy enough to be literate or b) those whose cultures valued written, rather than oral, histories, and more important than the texts upholding systems of oppressive power that have only in recent years come to be widely regarded as incorrect.

If the rise of ChatGPT is making you feel hopeless, I’d like to offer a reframe: grappling with it feels uncomfortable—and that’s okay. Maybe you, like me, have been known to whisper under your breath: there is no ethical consumption under capitalism. This phrase, however, is not an incantation that well-meaning people can utter to excuse their guilt for participating in, say, overconsumption, or in this case, Big Robot practices that are melting our brains and furthering systemic inequities. That’s why it doesn’t help to throw up my hands and say, “well, none of this matters, I’ll use the thing, anyway.”

My individual actions aren’t make-or-break for ChatGPT, but resignation in the face of what it portends would mean I’m not holding myself—or others—lovingly accountable to the radical act of envisioning a world that could work for all of us. It’s important to me that I live in a way that embodies my values. I haven’t yet found room for ChatGPT within that framework. Maybe this is the sole space that humans still occupy alone, that ChatGPT can’t yet enter: the realm of the imagination. May we protect and nurture it.

Lily Garnaat

What Happens When We Stop Doing Things for Ourselves?

Julia Morrison

This November, Spotify told me that I am adventurous, that I listen to more music than 95% of users, and that my vibe is “Moody/Comforting/Exciting.” Just as I suspected! I am more interesting than everyone else.

For over 433 million people worldwide, Spotify Wrapped day is a holiday in its own right. A day when we can show off our impeccable taste, have a laugh at the “embarrassing!!!” (still cool in an ironic way) songs that popped up in our most-listened, and gain a better understanding of our own listening habits.

What makes Spotify Wrapped so exciting, such an event, is that it teaches us something about our favorite subject—ourselves. Plus, it gives us an opportunity to share that information with others in a succinct, aesthetically pleasing package without directly bragging. You can tell all of your followers that you listen to songs they’ve never heard of and that you do it all the time! It’s a lot like using an astrology app or taking an online personality test. We put in a few facts about ourselves, and the AI Gods read it and tell us who we are.

And one could argue that’s what makes humans, humans: the persistent desire to know what we’re like, how others perceive us, and why we do the things we do (like listening to Reelin’ In The Years by Steely Dan over 80 times). And while no one wants to be alone, we want to be assured that we’re unique. There’s no one out there who’s exactly like us, and we are complete and total individuals. Spotify Wrapped does just that. To directly quote my own Wrapped: “This year, you explored 120 different genres. Look at you, you little astronaut.” It’s almost self-aware in its condescending reassurance that I am, in fact, a special genius.

In a capitalist society, individuality is highly valued and entirely necessary to keep everything “afloat.” We’re conditioned from the moment we enter this Earth to look for differences amongst ourselves and search for what makes us, us. Collectivity and the Greater Good aren’t nearly as important as individualism and making it on our own, so we constantly seek out reassurance that we’re special and—let’s face it—better than everyone else.

Spotify does a great job of playing upon this need for individuality. Its algorithm works overtime to understand us, to make us feel understood, and to predict our desires. According to Marketing AI Institute, Spotify is unique in the complexity of its algorithm. It’s hyper-personalized in a way no other streaming platform is. It “leverages user data, from playlist creation to listening history to how you interact with the platform, to predict what you might want to listen to next.” Ultimately, it nudges users towards what it thinks will make them satisfied, making them more likely to maintain their subscriptions and recommend Spotify to potential customers.

To sum it up: they tell you what to like. So while these constant playlists, mixes, and listening suggestions can make our choices feel limitless, what they’re actually giving us is the illusion of choice: the same kinds of songs and artists show up over and over. As successful as Spotify is in making listeners feel special, it’s slowly making us more similar.

So where do we go when we want to escape the strong and very spooky hands of the algorithm? How do we listen to or create music, free from the influences of The Algorithms? For years, DIY music has been an appealing, sometimes entirely necessary source of relief and empowerment from the suffocation of Big Music.

Despite what its name suggests, the DIY music scene is about community. Doing it yourself, together. While DIY music has arguably existed forever, specifically politicized DIY, as we know it today, started in the late-’70s punk scene. It’s hard to define, but in vague terms, it’s a form of music and a global community that keeps corporations and mainstream music culture out of the equation. Shows are cheap, albums are self-produced, and artists are often directly supported through donations at the door, merch sales, and album sales (remember buying albums?). Additionally, there’s a focus on inclusivity and safety that is often lacking in the mainstream music scene.

DIY music allows artists and listeners alike to escape the pressures of capitalism and large labels because it relies on community, crowdfunding, and close relationships to self-sustain. Rather than hoping to land on the “Chill Surfer Techno Beats to Listen To While Making Dinner on a Tuesday” playlist, artists make music for themselves and their community—a creative freedom that is hard to find when vying for the attention of the algorithm.

Music created in this way, removed from the pressures of trying to please Spotify, allows musicians to create work that is truly one of a kind. It empowers listeners to find new music organically and form their own opinions, rather than being spoonfed whatever the algorithm has cooked up. And rather than being pummeled with the seemingly endless “choices” that Spotify gives us, we can actively decide the artists and communities with which we engage. DIY offers a space where actual individuality can exist while still building spaces and relationships with a sense of community.

“But why does it matter? So what if people stop making music for themselves? I am a very busy person with too much to worry about as it is!” You make a great point. And to be honest, I don’t know the answers to your very aggressive line of questioning! But what I do know is that a world void of unique artistic expression sounds scary and, frankly, icky.

When artists and listeners are told what to make and what to enjoy by an algorithm, we approach a singularity. If every musician is motivated by the same thing—appealing to the same algorithm—what happens? We all become one big, shapeless gray blob of homogenous content, probably.

And while it’s impossible to predict the future, Spotify and its algorithm are having massively negative consequences for musicians right now. As Liz Pelly writes for The Baffler: “Spotify’s ‘pro-rata’ payment model, for example, means artists are paid a percentage of the total pool of royalties relative to how their stream count stacks up in the entire pool of streams, meaning the tiniest of payouts for most independent musicians.” (I highly recommend reading her article for a beautifully written deep dive into what exactly Spotify is doing to the music industry as a whole.)
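
To make the pro-rata idea in Pelly’s quote concrete, here is a minimal Python sketch. It assumes the simplest possible reading of the model: one royalty pool, split in proportion to each artist’s share of total streams. The function name, the artist names, and every number are invented for illustration; they are not Spotify’s real figures or its actual payout formula.

```python
def pro_rata_payouts(royalty_pool, streams_by_artist):
    """Split one royalty pool in proportion to each artist's share of all streams.

    Hypothetical helper for illustration only, not Spotify's actual formula.
    """
    total_streams = sum(streams_by_artist.values())
    if total_streams == 0:
        # Nobody streamed anything, so nobody is paid.
        return {artist: 0.0 for artist in streams_by_artist}
    return {
        artist: royalty_pool * streams / total_streams
        for artist, streams in streams_by_artist.items()
    }


# Made-up monthly numbers: one huge act, one mid-size act, one DIY band.
streams = {"chart_topper": 9_900_000, "mid_size_act": 95_000, "diy_band": 5_000}
payouts = pro_rata_payouts(100_000.0, streams)

for artist, amount in payouts.items():
    print(f"{artist}: ${amount:,.2f}")
```

With these made-up numbers, the DIY band’s 5,000 streams are 0.05% of all streams, so it would take home $50 of the $100,000 pool while the chart-topper takes $99,000.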

Unfortunately, Spotify is, by design, the easiest, most accessible way to listen to music. I still use Spotify. But luckily for us, we are complex beings with infinite amounts of attention and time to give to the things we care about. So if you’re interested in supporting smaller artists, doing so is incredibly simple: just listen to them.

Find out where they’ll be playing next and buy a ticket or donate at the door or both. Wait for merch drops and see if you can afford to buy a (genuinely) unique shirt or poster. Buy their albums on Bandcamp, *especially* on Bandcamp Friday—a day when 100% of profits go directly to the artists (the next one is February 7th). Virtually tip (Venmo, PayPal, whatever!) your favorite musicians and creators. And although it is easy to let Spotify tell us what to do, there is a real and visceral thrill in finding your new favorite artists organically.

Are AI-Generated Cryptids Going To Take Over?

Raven Reynolds

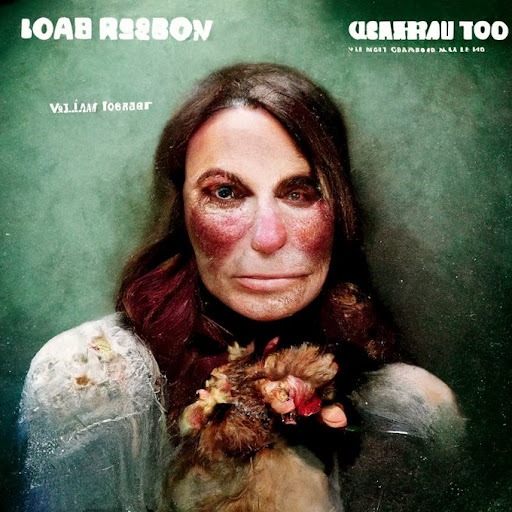

Meet Loab, the first digital cryptid!

If you’ve been on social media lately, then you’ve seen the AI portraits people have created of themselves. Some artificial intelligence engines like DALL-E 2 can create beautiful and otherworldly art from just a simple text prompt.

Not all of the results have been beautiful. These engines have also been generating images of terrifying creatures that people call digital cryptids. A cryptid is a creature that has not been proven to exist but that some people believe is real. Famous examples include Bigfoot, the Loch Ness Monster, and Slenderman. With AI generators creating horrifying monsters so quickly and without much thought, will their stories be as haunting as their predecessors’?

In April 2022, Twitter user supercomposite accidentally created Loab while playing around with an unnamed AI generator. First, they used a negative prompt for Marlon Brando and got a small logo of a skyline. Then they requested the opposite of the logo, and images of a woman with gaunt features and bright red cheeks kept popping up. Supercomposite recognized this as unusual and went on to create thousands of images of Loab by combining them with other AI-generated images.

The images range from a little unsettling to ABSOLUTELY NOPE. Images of Loab have been haunting the internet ever since. She even has her own Wikipedia page and a growing fanbase.

While digital cryptids like Loab are spooky and a bit fun, I honestly don’t think they will ever compare to legends like Mothman or Slenderman. Those legends had more time to be imagined, and their stories are so influential that they have affected the lives of many real people. To me, that grainy footage of a UFO flying over a neighborhood will always beat a spooky internet lady who was created by accident.

AI Variant Animation

Courtney "CB" Brown

I fed DALL-E my cover illustration for this issue and asked it to create a variation. I then asked it to create variations on those variations over and over again. Besides choosing which variation to keep each round, I provided no further input. This video documents DALL-E’s journey to the final iteration and gives a glimpse into its strange and mysterious robot brain.

To my knowledge, this process was initially created and explored by digital artist Alan Resnick. Artists using AI to expand on their already existing work and voice, like Resnick, provide a tiny glimmer of hope in the AI landscape for artists. Rather than a catalyst for our complete cultural devaluation, I desperately want to believe in a future where AI is simply another tool in an artist’s toolbox.
