Did you know that the technologies we use every day often perpetuate racism? Here’s why that matters, and the best ways we can address it.

Wait, technology can be racist? Well, as it turns out, yes. Technology is predominantly designed, tested, and created by white folks—human beings who all function in a world where whiteness and systemic racism are the default. Humans who are inherently biased.

Here are just 4 examples of the racial bias in media technology, what it means for us IRL, and how all of us (spoiler alert: you don’t have to be a tech expert!) can combat it. Be sure to check out the articles below to learn more.

From new clothes to astrological memes, we’re constantly bombarded with content that algorithms think we’ll like. But on the flip side, algorithms can also create echo chambers of people and content. It’s not as hard as it sounds to end up in a rabbit hole of all-white creators and even Neo-Nazi propaganda. Predictive technology can perpetuate our own (and society’s) biases, particularly anti-Black bias. As Lung Ntila puts it, algorithms “are, in short, the technological manifestations of America’s anti-Black zeitgeist.” Scrolling through social media that is controlled by discriminatory algorithms has real-life, long-term negative effects on the mental health of youth of color.

When it comes to our own timelines and feeds, the best way to challenge these biases is pretty simple: follow more Black and brown creators and influencers. Not only does this amplify their impact online, but it also diversifies the content you’ll see every day.

Check out this New York Times podcast, Rabbit Hole, for more information.

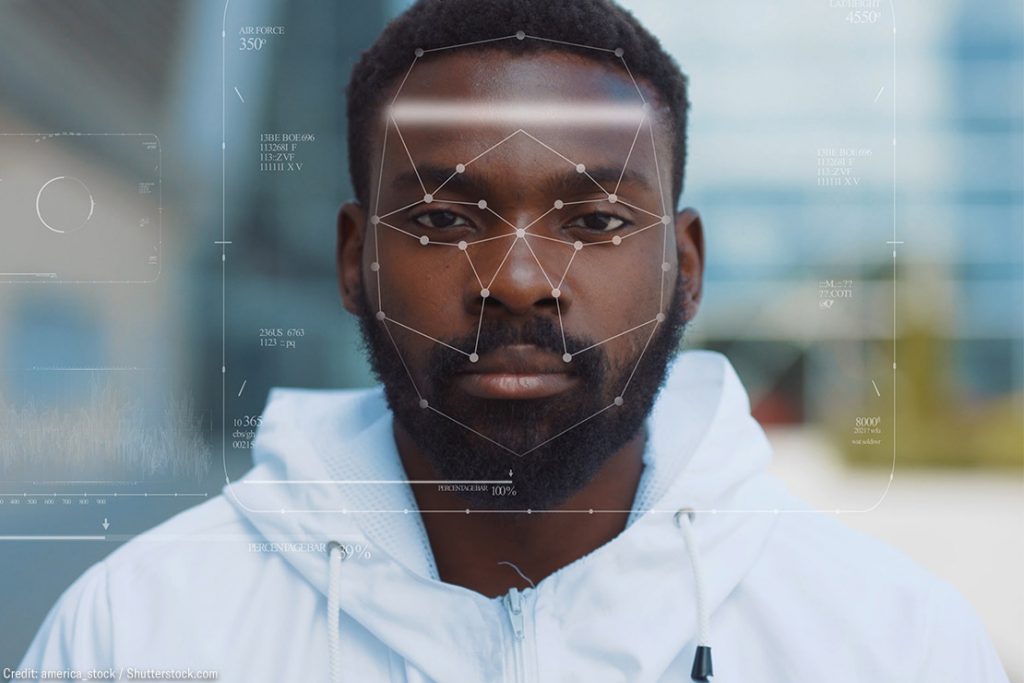

Facial recognition is more than just a fast way to unlock our iPhones. It’s frequently used by law enforcement to identify and locate potential suspects. However, this technology misidentifies Black and brown folks 10 to 100 times more often than white folks.

The solution to this problem lies in the hands of tech companies, and it comes down to this: we can think more critically and include richer data when implementing this software. Charlton McIlwain got right to the heart of it: “We must deeply engage the historical and present realities of race, racial discrimination, and the disparate impacts of race that play out in virtually every social domain.”

To learn more, check out this article from the ACLU about facial recognition and its contribution to the policing and surveillance of Black people.

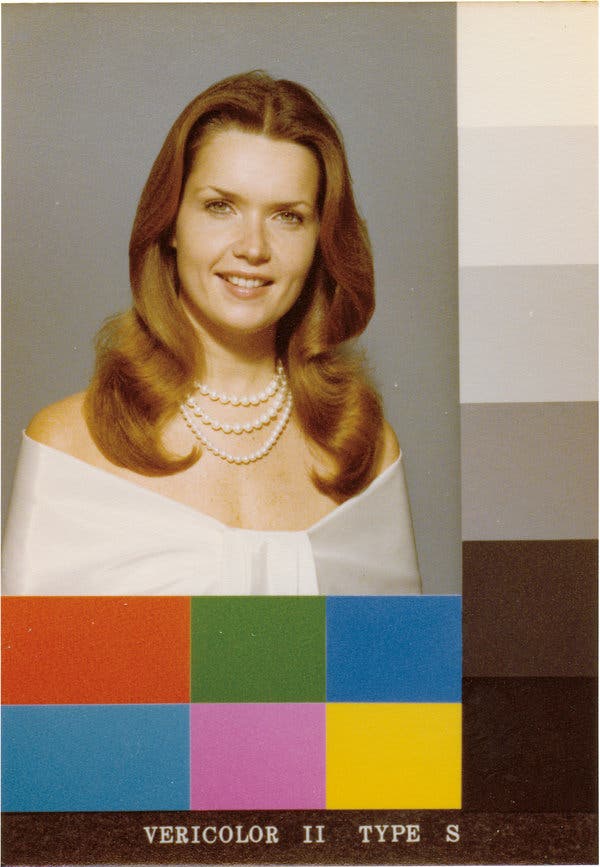

Photos don’t lie, right? Well…not exactly. Until quite recently, photographs were developed and color-balanced against the tones and colors in a reference image of a white woman, called a “Shirley Card.”

The implication? Many photographers, until recently, were unable to accurately capture darker skin tones in images. “The result was film emulsion technology that still carried over the social bias of earlier photographic conventions,” explains Harvard professor Sarah Lewis in this essay.

The solution for photographers and creators is simple: learn how to shoot and edit darker skin tones. Check out the countless tutorials on YouTube and Adobe.

Social media platforms such as Facebook have recently implemented restrictions on ad targeting. Why? Previously, advertisers could target individuals based on their race when creating ads for things like housing, employment, and credit. Although this kind of straightforward discrimination is no longer allowed on Facebook, there are still countless sneaky ways advertisers can target users based on race and income, like targeting certain ZIP codes, job titles, and interests.

So what can advertisers do? This might sound familiar: we can do more research. Using market research to make sure the audiences we build are inclusive, or even broadening ad audiences, are a few places to start.

To dig in further, check out this article from Wired.

So, How Can You Help?

Dismantling technological racism is hard work—but it’s work that all of us can (and must!) engage in every day. It looks like an overhaul of the world of technology as we know it—from encouraging our legal system to take on the exponential growth of AI technology, to making the tech world more welcoming to and representative of people of color, to recognizing and welcoming more diverse voices in tech, for starters.

That means thinking critically about the ways racial bias can affect our content, committing to anti-racism work, asking hard questions, dedicating ourselves to amplifying the voices of Black and brown creators and designers, and hiring and retaining people of color in media and technology positions.